Data set synthetization to improve deep learning

- Contact:

Roman Bruch

- Project Group:

Machine Learning for High-Throughput Methods and Mechatronics (ML4HOME)

- Funding:

BMBF

- Partners:

Mannheim University of Applied Sciences, Merck Group

- Start date:

2022-02-01

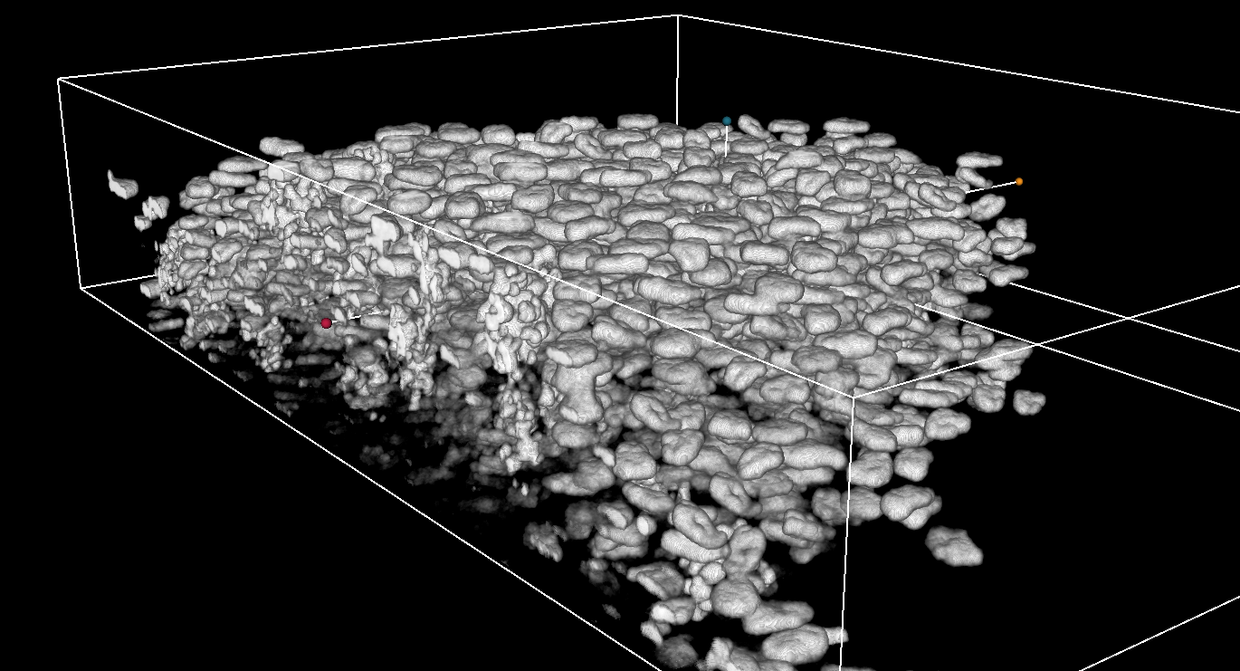

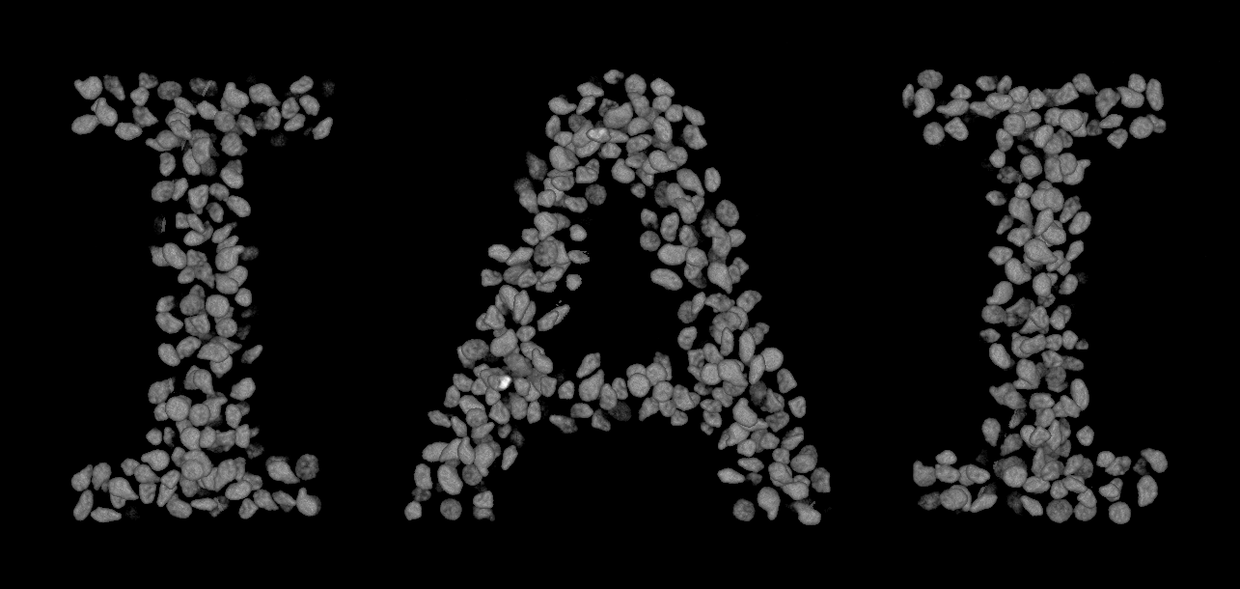

A common drawback of deep learning algorithms is their need for large, annotated datasets for training and evaluation. For 2D images, manual annotation is often seen as merely a burdensome and time-consuming task. The annotation of higher-dimensional data such as 3D or 3D+t images, however, introduces new difficulties. Visualization and annotation are mainly limited to 2D views, because rendering large 3D structures with many instances is not very useful. The additional dimensions thus lead to a severe increase in the amount of data to be annotated. Furthermore, recognizing objects spatially is more difficult, and generating consistent object outlines between layers is not feasible. Manual annotation of higher-dimensional data is thus a nearly impossible task.

The main goal of the project is to develop and improve methods for generating synthetic image data whose labels are known by design. Important aspects of this research are the inclusion of rare object states and structures and the consideration of biophysical forces. In addition, methods for evaluating the quality and physical plausibility of the synthetic data are being developed.
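To illustrate what "labels known by design" means, the following is a minimal sketch and not the project's actual method. It assumes NumPy, uses plain spheres as a stand-in for real biological structures, and omits rare object states and biophysical forces. The function name `synthesize_volume` and all of its parameters are hypothetical.

```python
import numpy as np


def synthesize_volume(shape=(32, 64, 64), n_objects=5,
                      radius_range=(4.0, 8.0), noise_sigma=0.05, seed=0):
    """Return (image, labels): a noisy 3D volume and its instance label mask.

    Hypothetical illustration: objects are simple spheres, so the label of
    every voxel is known at the moment the object is placed.
    """
    rng = np.random.default_rng(seed)
    labels = np.zeros(shape, dtype=np.int32)
    zz, yy, xx = np.indices(shape)  # voxel coordinate grids

    for instance_id in range(1, n_objects + 1):
        radius = rng.uniform(*radius_range)
        # Keep the whole sphere inside the volume
        cz, cy, cx = (rng.uniform(radius, s - radius) for s in shape)
        inside = (zz - cz) ** 2 + (yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2
        # Only claim background voxels so that instances never overlap
        labels[inside & (labels == 0)] = instance_id

    # Render a crude "microscopy" image: foreground intensity plus Gaussian noise
    image = (labels > 0).astype(np.float32)
    image += rng.normal(0.0, noise_sigma, size=shape).astype(np.float32)
    return image, labels


image, labels = synthesize_volume()
print(image.shape, labels.shape, int(labels.max()))
```

Because the label mask is written while the objects are placed, no manual annotation step is needed; a realistic generator would replace the sphere model and the noise model with ones matched to the imaging modality.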

Publications

Bruch, R.

2025, August 4. Karlsruher Institut für Technologie (KIT). doi:10.5445/IR/1000183485

Bruch, R.; Vitacolonna, M.; Nürnberg, E.; Sauer, S.; Rudolf, R.; Reischl, M.

2025. Communications Biology, 8 (1), Art.-Nr.: 43. doi:10.1038/s42003-025-07469-2

Bruch, R.; Vitacolonna, M.; Nürnberg, E.; Sauer, S.; Rudolf, R.; Reischl, M.

2024. arXiv. doi:10.48550/arXiv.2408.16471

Vitacolonna, M.; Bruch, R.; Schneider, R.; Jabs, J.; Hafner, M.; Reischl, M.; Rudolf, R.

2024. BMC Cancer, 24 (1), Art.-Nr.: 1542. doi:10.1186/s12885-024-13329-9

Nürnberg, E.; Vitacolonna, M.; Bruch, R.; Reischl, M.; Rudolf, R.; Sauer, S.

2024. Frontiers in Molecular Biosciences, 11. doi:10.3389/fmolb.2024.1467366

Nuernberg, E.; Bruch, R.; Hafner, M.; Rudolf, R.; Vitacolonna, M.

2024. 3D Cell Culture. Ed.: Z. Sumbalova Koledova, 311–334, Springer US. doi:10.1007/978-1-0716-3674-9_20

Vitacolonna, M.; Bruch, R.; Agaçi, A.; Nürnberg, E.; Cesetti, T.; Keller, F.; Padovani, F.; Sauer, S.; Schmoller, K. M.; Reischl, M.; Hafner, M.; Rudolf, R.

2024. Frontiers in Bioengineering and Biotechnology, 12. doi:10.3389/fbioe.2024.1422235

Böhland, M.; Bruch, R.; Löffler, K.; Reischl, M.

2023. Current Directions in Biomedical Engineering, 9 (1), 467–470. doi:10.1515/cdbme-2023-1117

Rettenberger, L.; Münke, F. R.; Bruch, R.; Reischl, M.

2023. Current Directions in Biomedical Engineering, 9 (1), 335–338. doi:10.1515/cdbme-2023-1084

Böhland, M.; Bruch, R.; Bäuerle, S.; Rettenberger, L.; Reischl, M.

2023. IEEE Access, 11, 127895–127906. doi:10.1109/ACCESS.2023.3331819

Bruch, R.; Keller, F.; Böhland, M.; Vitacolonna, M.; Klinger, L.; Rudolf, R.; Reischl, M.

2023. PLOS ONE, 18 (3), Art.-Nr.: e0283828. doi:10.1371/journal.pone.0283828

Bruch, R.; Vitacolonna, M.; Rudolf, R.; Reischl, M.

2022. Current Directions in Biomedical Engineering, 8 (2), 305–308. doi:10.1515/cdbme-2022-1078

Bruch, R.; Scheikl, P. M.; Mikut, R.; Loosli, F.; Reischl, M.

2021. SLAS Technology, 26 (4), 367–376. doi:10.1177/2472630320977454

Bruch, R.; Rudolf, R.; Mikut, R.; Reischl, M.

2020. Current Directions in Biomedical Engineering, 6 (3), Art.-Nr.: 20203103. doi:10.1515/cdbme-2020-3103

Bruch, R.; Rudolf, R.; Mikut, R.; Reischl, M.

2020. 54th Annual Conference of the German Society for Biomedical Engineering (DGBMT 2020), Online, September 29–October 1, 2020